Deploying AI/ML in the cloud securely

Last month I finished a project with the Azure team: a secure, PaaS-based solution that provides step-by-step guidance and automation so that researchers can use machine learning to run scientific experiments securely.

The key thing here is that it's built to be /Secure/.

Problem

Most of the time, when a traditional experiment is run, an experimenter or researcher starts with a data set they got their hands on and uses a tool such as Jupyter Notebook to create an R- or Python-based experiment. Frequently the experiment runs on a simple data science VM or a workstation.

The scientist will then run the experiment (which may be computationally intense), turn to a cloud solution in Google or Azure to score it, and finally use Tableau, Power BI, or Excel for visualization.

Since researchers are not security experts and often do not consider their work to be sensitive, they may expose it to many risks, including:

- The data they use is frequently in Excel or CSV format, which introduces data classification and handling issues.

- The data may contain sensitive information, e.g. credit card numbers, Social Security numbers, or other PII.

- The VMs and workstations they use are not secure by design, or worse, users remove security protections that might get in the way of the experiment.

- The cloud services they use are premium services, highly sought after by bad guys.

- The management and operations planes of those services are frequently left unprotected, or are not managed with security in mind.

- Researchers share and collaborate, and may expose secrets (such as cloud account information).

- The research itself (both the experiment and the results) becomes the most valued asset as a system gets trained, and it is frequently left unprotected.

- New AI attacks target not only the raw data and the experiment, but also the trained systems.
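
To make the sensitive-data risk above concrete, here is a minimal sketch of how a researcher might check a CSV file for values that look like Social Security or credit card numbers before sharing or uploading it. It is not part of the solution described in this post; the function names and patterns are my own illustrative assumptions, and a real data classification tool would need far more than two regular expressions.

```python
import csv
import io
import re

# Illustrative patterns only (assumptions, not taken from the solution).
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_ok(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive_cells(csv_text: str):
    """Return (row, column, kind) for cells that look like an SSN or card number."""
    findings = []
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])
    for row_num, row in enumerate(reader, start=2):  # row 1 is the header
        for col, cell in enumerate(row):
            name = header[col] if col < len(header) else f"col{col}"
            if SSN_RE.search(cell):
                findings.append((row_num, name, "ssn"))
            for match in CARD_RE.finditer(cell):
                digits = re.sub(r"\D", "", match.group())
                # The Luhn check filters out most random digit runs.
                if 13 <= len(digits) <= 19 and luhn_ok(digits):
                    findings.append((row_num, name, "card"))
    return findings
```

Calling `find_sensitive_cells(open("data.csv", newline="").read())` on a hypothetical `data.csv` returns a list of flagged cells; an empty list only means these two patterns found nothing, not that the file is safe.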

As you can imagine, security is a burden and is frequently left for last, if it is addressed at all. Once an ML service is compromised, it's too late to try to patch on security.

Building AI and Machine learning with a better starting point

To help scientists and IT pros with this challenge, I led a small team in developing the Azure Health Data and AI solution. The solution was built to drive awareness of the problem and to provide a quick-to-deploy, automated solution that illustrates what a secure AI/ML solution looks like.

The solution design lets a user run simple-to-navigate PowerShell scripts to deploy the end-to-end, PaaS-based AI/ML solution, which was built from the ground up with security in mind.

The Design

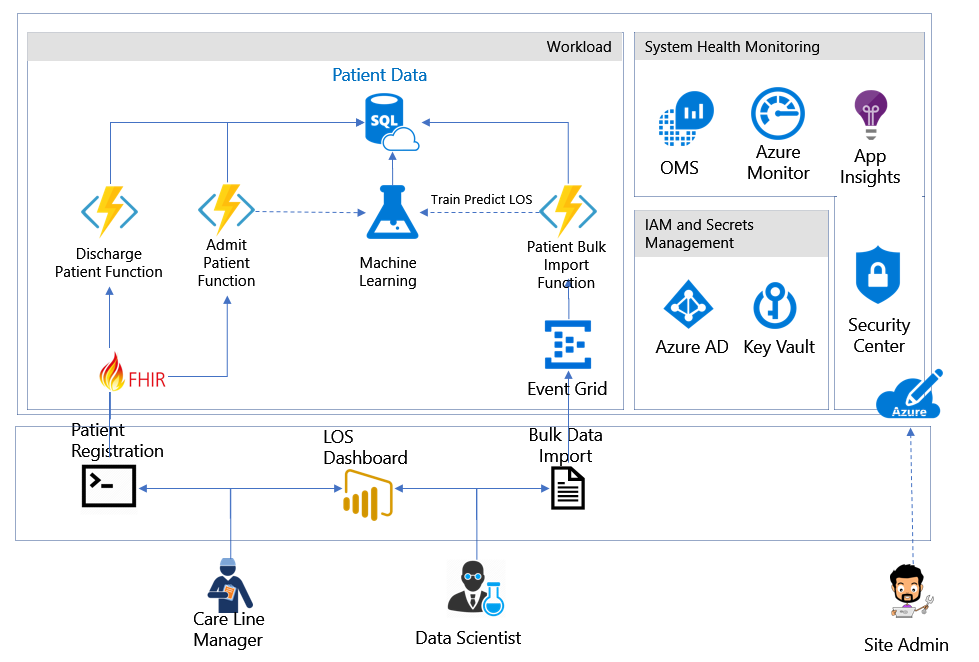

The solution started with a design and reference architecture identifying all the services and the users that will interact with the model, and a threat model to understand the risk vectors and the attack surface.

It is designed with security in mind and comes with simple-to-use scripts so you can get hands-on with the automation.

Deploying the automated scripts to Azure

The provided automation deploys the core solution components, which include all the management and security components, and sets up the storage and the serverless Azure Functions applications that import the data into the solution. This portion of the PowerShell scripts is run as a global admin (note: protecting your admin accounts is a blog post of its own).

Here are two very basic things you need to do when working with your global admin accounts.

Upload the raw data, and train the ML

The solution provides command-line cURL automation illustrating how a researcher can import sample data stored in a .csv file and train a sample machine learning model.
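
For readers who would rather script the import step than type it at the command line, the following is a rough Python sketch of the same idea: posting a .csv file to an HTTPS endpoint. The URL, the function name, and the choice of key header are assumptions made for illustration; the solution's own scripts define the real endpoint and authentication, so treat this as a shape to adapt rather than the actual interface.

```python
import urllib.request


def build_csv_upload_request(url: str, api_key: str, csv_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST request that uploads CSV data.

    The endpoint and the key header are placeholders (assumptions);
    check the solution's documentation for the real values.
    """
    # Refuse plain HTTP so patient data and keys are never sent unencrypted.
    if not url.lower().startswith("https://"):
        raise ValueError("refusing to send data over a non-HTTPS endpoint")
    return urllib.request.Request(
        url,
        data=csv_bytes,
        method="POST",
        headers={
            "Content-Type": "text/csv",
            # Azure Functions can accept a function key in this header;
            # whether this solution uses it is an assumption.
            "x-functions-key": api_key,
        },
    )


# Example use (not executed here). Read the key from an environment variable
# instead of hard-coding it, so it is not exposed when the script is shared:
#
#   import os
#   with open("sample.csv", "rb") as f:
#       req = build_csv_upload_request(
#           "https://<your-function-app>/api/<import-endpoint>",
#           os.environ["IMPORT_API_KEY"],
#           f.read(),
#       )
#   with urllib.request.urlopen(req) as resp:
#       print(resp.status)
```

Keeping the key out of the script matters here for the same reason listed earlier: researchers share their work, and a hard-coded key travels with it.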

Load new data and review results from the trained ML

Once the system is trained, the automation provides sample patient data that can be loaded with the same cURL automation, using the trained ML model to predict outcomes.

Last, the solution provides a simple Power BI dashboard giving us a view of the data (both bulk loaded and trained) to help visualize our experiment. All done securely!

Outcome

The key takeaway from a solution like this one is that security can be baked into the design early on, and that security measures do not need to make the user experience complex or difficult to implement.

If you like this solution, grab a clone here and try deploying it. Since everything in the solution was released under open source licensing, I'd recommend you roll up your sleeves and learn from our efforts what it takes to build a secure cloud PaaS solution for AI and storage!
